Oh Boy AI

the thing we're all talking about

I’ve had a lot, and I mean a lot, of discussions about AI in the past 3-6 months. It’s been a bit of an emotional rollercoaster, from “hey cool this is neat tech” to “omg is my entire profession going to be wiped out” to “it’s another tool in the box”. Like all new technologies, AI is going through the hype cycle as people find what works and how it fits into products and day-to-day work, and as everything settles into the new normal.

For those who lived through cloud, mobile, and crypto, this all feels really familiar. But neither cloud nor mobile nor crypto went away. They just sort of found their place in the world and they’re now standard in a lot of industries and workflows.

I wanted to put some thoughts down about AI, how I’m using it, and how I think organizations should be embracing it.

AI For The Individual Contributor

For the IC, AI is both amazing and horrifying. On the one hand, it can spit out an insane amount of mostly workable code. On the other hand, it can spit out an insane amount of mostly workable code.

When I first started to play with AI I was throwing it at ambiguous problems and then watching it hallucinate and spiral out of control. And while that’s kind of fun and hilarious, that first interaction left a bad taste in my mouth. On top of that, as an IC I am drawn to deterministic outputs and LLMs are just not that! Chaotic systems are those where a very slight variation in input gives wildly different outputs. LLMs aren’t really that, but they sort of feel that way. It was challenging to find a reasonable way to use them.

I’ve been using Copilot for a long time, and I quite liked the small context-sensitive suggestions for boilerplate I didn’t want to write. I probably would have killed for Copilot when I was at Stripe working in Go before generics. There are only so many for loops to map data from A to B I can write in my life and I’ve long exceeded that amount.

Incorporating something like Claude into my workflow has been a learning curve. What I’ve come away with is it’s great, for me, in two scenarios:

Discovery Engine

LLMs are a fantastic way to explore new topics using NLP as a human interface. I mean that’s what LLMs are best at: NLP (natural language processing). I often ask Claude about syntax I don’t know, topics I am learning about, and as a general entrypoint into knowledge systems.

But, and this is the big but, probing ends mostly there. After that I will often try and go to primary documents or other vetted knowledge bases to dig further. LLMs are prone to giving close-but-not-quite answers but that’s kind of OK if you’re trying to frame a mental picture and squint at a fuzzy thing to understand its shape.

I’ve taken to using LLM entrypoints as a first pass for the unknown. The moment I encounter things that are clearly wrong or it’s starting to break down I give up on it. It’s not time well spent to try and get an LLM to give you an answer you want. That’s a signal that you don’t know enough to ask or that the information is more nuanced, and it’s back to using your grey matter to figure it out.

LLMs can be an amazing way to facilitate learning and learning faster. But you can’t short circuit actual learning, even if the LLM looks like it can do all the work for you.

As an example of this in a non-technical area, my partner and I recently bareboat chartered in Croatia and sailed the Adriatic for a week. We’ve been sailing for 7 years and done a lot of long trips here in the PNW and into the Canadian Gulf Islands, so we’re not strangers to life on a boat. But this was the first time we were planning an overseas adventure. As part of planning I spent a lot of time poring over charts, pilot books, and forums trying to piece together routes, anchorages, and provisioning areas. I was feeling overwhelmed by all the new information and it was hard to figure out what was a good starting point. To help narrow the focus down I opted to try ChatGPT, and at first glance it pulled some great information. I asked it to get me context on prevailing winds, backup routes, weather forecasts, all sorts of stuff I have to do when planning a trip.

While I was impressed with what it came up with, a lot of it turned out to be nonsense. However, it did give me a great starting point for further analysis and it helped frame my mental model of what was common in the area. When I finally got the plan together it was nothing like what the LLM gave me, but I think this is a good example of using LLMs as discovery engines and probing through ambiguities.

Boilerplate Code Monkey

LLMs are pretty good at writing code, and a lot of it. However, most code in the wild is absolutely fucking awful and lines of code written is probably one of the worst metrics you can ever include as a measure of success. Anyone can write poorly factored, poorly encapsulated, poorly architected code. I should know, I spent years doing it as a junior engineer! Well written code is an art and requires deep understanding of the direction you’re going, the systems you’re in, and the trade-offs you’re making.

I’ve had a lot of success using LLMs to do semi-complex work when I direct them with clear examples and the codebase I’m in is resilient to these kinds of changes. What I mean is that the code in the codebase is consistent and has lots of good tests and test fixtures, linting, and obvious, easy-to-consume patterns.

In areas of code that are more chaotic, the LLM creates more chaos. Left to its own devices it creates tests that are mocking everything and testing effectively nothing, imagining endpoints that don’t need to exist, special casing workflows, duplicating code it doesn’t know exists already, etc.
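To make the “mocking everything and testing effectively nothing” failure mode concrete, here’s a minimal sketch in Python. The function `apply_discount` and both test helpers are hypothetical names invented for illustration; they aren’t from any real codebase. The first test swaps the function under test for a mock, so it can only ever confirm what the mock was told to return. The second one exercises the real logic.

```python
from unittest.mock import MagicMock


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical business logic: price after a percentage discount, rounded down."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def over_mocked_test() -> bool:
    # The function under test is replaced by a mock, so this only checks
    # that the mock returns the value we configured. It would still pass
    # if apply_discount were completely broken.
    fake_apply_discount = MagicMock(return_value=900)
    return fake_apply_discount(1000, 10) == 900


def behavioral_test() -> bool:
    # Calls the real implementation, including a case that exercises the
    # round-down behavior, so a regression would actually be caught.
    return apply_discount(1000, 10) == 900 and apply_discount(999, 50) == 499
```

Both tests go green, which is exactly the problem: only one of them would notice if the discount math changed.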

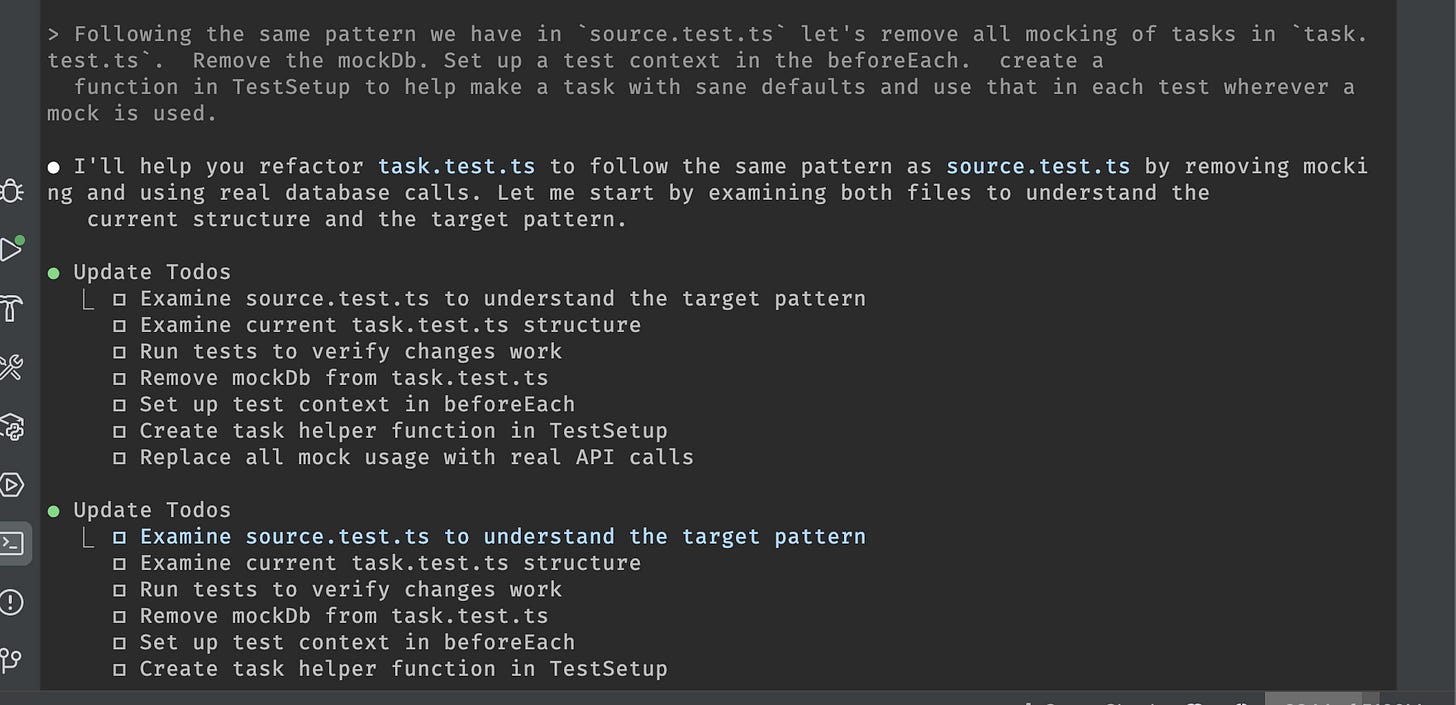

Recently I had a Claude interaction that was very successful for me:

I like these kinds of tasks for an agent. They are reasonably scoped, annoying for a human to automate, and move the needle in a net positive way. However, at some point I also tried to use it for a task that would touch hundreds of files and it broke down really quickly.

I know it’s possible to get LLMs to do more but the cost of guiding and reviewing their work isn’t worth it to me. In fact I think most people suck at explaining what they want. I’ve read enough JIRA tickets to know that even the desired final outcome of an ask is often ambiguous.

I am probably not going to be someone who spends ages customizing agent workflows, creating ultra detailed CLAUDE.md files, etc. Just like I don’t spend a lot of time making vimrc files. I firmly believe tools should be easy to use. After all, the easy thing should be the right thing. I’m too lazy I guess. That said, the kind of work showcased above is tedious and painful, and I just hate it. Having the LLM do it is actually a massive time saver, but it was successful because I did the example that it follows myself. This is kind of no different to how I’d lead a team of people: good patterns propagate and bad patterns propagate too.

It’s critical though that I review all of its output and validate that it does the right thing. If I let it write code that I don’t understand then it’s ralph-meme territory.

Pitfalls Of Vibing

Letting LLMs vibe their way through code is becoming such an issue that new patterns of code engagement are being explored, like disclosing whether LLMs were used to create code. It makes sense: if you’re reviewing things it does actually matter if someone thought the code through or not. Even if you use an LLM, did you actually review it with the same rigor as if you wrote it by hand? I can tell you for sure if I see a PR with 1k+ changes I’m not reading any of that too carefully, and either I trust a coworker has thought it all through or we’re going to go back to the drawing board.

It’s funny to think that code review as a skill is more important than ever now, and many people struggle with reviewing code. It’s hard, and it takes years of practice. Often code review only works when you have a mental model to map things to. I expect code to follow specific shapes, patterns, and similarities. That comes with lots of experience and practice though.

In any setting, reviewing thousands of lines of effectively auto generated code is never going to happen. How many of us have scaffolded a project using some new framework and just ¯\_(ツ)_/¯’d our way through the generated code? We learned a long time ago that small changes are easier to follow, keeping cognitive load low and minimizing bugs.

As ICs we should be using LLMs in the same way and free ourselves up to focus on the important things. Things like setting up codebases and systems for success. If you don’t know what success is, your LLM agents will run rampant making their own decisions for better or worse.

I recently spent some time in a heavily LLM coded codebase and very quickly found inconsistencies that actually slowed me down and led my own agent use down rabbit holes that created more issues than they actually fixed. I had to pull back, refactor, and backtrack to undo a lot of cruft that worked but wasn’t extensible. I was tempted by the vibe-dragon and it bit back.

AI In Products

Just about every company in the world is trying to figure out how to incorporate LLMs into their product, not just their employee workflows.

A lot of new products are coming out that are 100% LLM driven and it will be interesting to see how many of those succeed over time. These companies are leveraging RAG, LangChain, and in general finding ways to break down their problem domain into smaller sub-contexts for agents to excel at, and then chaining them together to create more interesting flows.

I think using NLP as an interface is really cool and having a Claude style prompt in scenarios where complex onboarding occurs is actually slick as hell. Using LLMs as tiny “nice to haves” in places is a joy. Some good examples are places in systems that ask for some sort of textual boilerplate: “title” or “description” are classic examples. Let some AI slop auto gen that based on other localized context and that’s a loveable moment in any product. I’d be surprised if this wasn’t even baked into new UI frameworks honestly. I worked on an NLP system in 2017 trying to categorize text into groups and it’s funny that we built hand rolled models to do this when an LLM excels at exactly this.

However, there is a limit to how much people want to interact with a non-deterministic engine that starts to hallucinate over time. Eventually people want determinism and control. Adding LLMs on systems that already have well defined APIs and SDKs is a great way to gracefully extend products. Building escape hatches is critical. I almost feel like the escape hatch should be the first way you build things: SDK and API first, and then let LLMs drive using structured schemas and MCP tools as constraints to your system.
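Here’s a rough sketch of what “API first, then let the LLM drive through a schema” could look like. Everything in it is an assumption for illustration: `create_invoice`, the `TOOLS` registry, and the JSON call shape are hypothetical, and this is plain Python rather than the actual MCP protocol. The point is only that the model’s proposed call gets validated against a declared schema before it touches the same deterministic function a human could call directly.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


def create_invoice(customer_id: str, amount_cents: int) -> dict:
    """Hypothetical product API: the deterministic escape hatch users can call directly."""
    return {"customer_id": customer_id, "amount_cents": amount_cents, "status": "draft"}


@dataclass(frozen=True)
class Tool:
    func: Callable[..., dict]
    schema: dict  # argument name -> exact Python type required


# Only functions registered here are reachable by the model.
TOOLS = {
    "create_invoice": Tool(create_invoice, {"customer_id": str, "amount_cents": int}),
}


def dispatch(raw_call: str) -> dict:
    """Validate a model-proposed tool call (JSON text), then run the real API."""
    call: Any = json.loads(raw_call)
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        raise ValueError(f"unknown tool: {call.get('tool')!r}")
    args = call.get("arguments")
    if not isinstance(args, dict) or set(args) != set(tool.schema):
        raise ValueError("arguments do not match the schema")
    for name, expected in tool.schema.items():
        # Exact type match, so "500" or True is rejected where an int is required.
        if type(args[name]) is not expected:
            raise ValueError(f"argument {name!r} must be {expected.__name__}")
    return tool.func(**args)
```

A well-formed call such as `dispatch('{"tool": "create_invoice", "arguments": {"customer_id": "c_1", "amount_cents": 500}}')` goes through to the real function, while an invented tool name or a stringly-typed amount raises `ValueError` instead of reaching the system.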

I see this the same way mobile happened. Do you like it when a company ONLY has a mobile app? It can be nice to have a desktop app, or even an in person moment (depending on industry). It’s great when it has a mobile app, but forcing people into specific workflows often kind of sucks.

Customer success was hit super hard with bots long before LLMs came out in force, but if you’ve ever interacted with an LLM in chat (or even worse, voice) the lack of humanity comes out quickly, and it is degrading in a moment when you need actual help! I think companies should tread carefully in this space and there is a lot of value in having an actual real human who can help. Weirdly enough this is actually a differentiating feature now.

AI In Organizations

Where I think LLMs shine in an organization is not in replacing anyone but in simplifying the complexity of communication and reducing cognitive load for people, enabling them to think and work on the things that matter the most.

LLMs that summarize project statuses and can provide real-time stats help project managers focus on the interpersonal and strategic decisions they actually want to be focusing on.

LLMs that integrate into Slack and create overviews of long threads help welcome new people into a complicated conversation, lowering the barrier to entry and improving communication equity. We’ve all seen a 100+ message thread and nope’d out because ain’t nobody got time for that.

LLMs that act as a souped-up first-pass triage for inter-team questions, like a Zapier on steroids, are great for reducing the time teams spend hand-holding first-touch interactions with other teams.

There are endless uses for NLP to remove annoyances. However I firmly believe that these flows don’t replace anyone. If anything they should free people up to think and act and decide on more important things.

As an example, I pasted a draft of this article into Claude and I tried to give it a non biased request for value

And it certainly stroked my ego

But this is a great way of getting information quickly and if I want to dig in more I can.

The 10^n fallacy

We’ve all heard the “everyone is gonna be a 10000x engineer” but in reality I think LLMs make a 10x engineer a 12x engineer by freeing up their time to focus on things that matter.

I think LLMs make a 1x engineer a 0.5x engineer because they aren’t getting better and learning when relying heavily on LLMs. Junior and mid engineers become seniors and staff and principals. And unlike LLMs, humans do learn and get better. Time invested in a person pays off over time. Re-prompting an LLM doesn’t.

Even in my example above, if I consistently and only use LLMs to summarize, or write for me, then I’m going to atrophy that muscle. I often mentor my engineers to practice writing and reading comprehension.

There’s been a lot of toxic hype about executives frothing to get rid of all their employees in favor of massive agentic webs, but every organization is only as good as its people. This has been true for all time: pre-industrial revolution, post, the computing age, etc. You need to invest in people.

I think organizations are coming around. It was refreshing to hear at least one executive see through the hype veil, but it seems that most executives are taking an extremely heavy micro-managing hand here, which just breeds resentment and skepticism about their actual incentives.

Contrary to how Coinbase handled their AI policy, I think this GitHub post is actually an interesting and healthy approach to AI centric tooling. This snippet is key to me:

Their mistake is treating AI adoption as a technology problem when it is, in fact, a change management problem. Companies fail at AI adoption because they treat it like installing software when it's actually rewiring how people work. The difference between success and failure isn't buying licenses. It's building the human infrastructure that turns skeptical employees into power users.

Places that have created a major sense of distrust failed because it’s unusual for leadership to demand specific tool usage of employees! I’ve never seen it before. As an example, an IDE with semantic refactoring support saves an enormous amount of time and yet nobody has ever looked a vimlord in their dead eyes and told them to :wq! into IntelliJ. I’m curious what incentives are at play that managers and executives who are many levels removed from day-to-day work find it reasonable to dictate specific tooling?

The way to get people to use tools is to show them how the tools can make them more powerful and help them find more joy in their work. And the goal isn’t to replace them, it’s to augment them. Sometimes that means answering questions that might be hard to google. Sometimes that means generating unit tests. Sometimes that means upgrading React Native, and other times it means writing me a for loop because it’s boring.

That said I think removing engineers from coding is going to backfire. Engineers are good because they know the systems they are in. If a mechanical engineer doesn’t know how torque works, can they design an engine? If an aerospace engineer isn’t living and breathing the thermo coefficients of materials can they actually build a re-entry hull for spacecraft? Abstractions can remove someone from certain levels of work; few of us work in assembly, for example. But we still need to know what is going on at all levels and sometimes we do need to drop in that low!

Conclusion

I think LLMs and AI are here to stay but the level at which people interact with them or find them helpful in their lives varies wildly. For professionals who are using LLMs to speed up their work, we need to be disciplined and diligent to not offshore our own brains to the agent. We need to invest in people, like we always have, and let people discover what helps them and embrace those patterns. We need to carve time out for people to improve themselves and deepen their understanding of things so that they can actually be useful in managing LLMs. If we fall into the fallacy that everyone is working at 100x the speed, how are we actually going to manage the 100x output?

We’re at an interesting point in time but in the end all you have is your brain so use it or lose it.